1. CoinExchange Summary

This document applies only to deployments of the commercial full version of the CoinExchange digital currency exchange system.

This document will be updated from time to time with new content to make it more complete. Please stay tuned.

Before deploying with this document, read through the overview document I wrote on the open-source project's home page. It gives you a general picture of the whole project and a preliminary understanding of what you are deploying. After all, even I sometimes get annoyed juggling more than a dozen microservice jar packages.

In this document I have tried my best to explain seemingly complex business logic and technology in plain language. Anyone with a reasonable technical foundation should understand the whole project after reading it.

About deployment: because everyone's technical background is different, you will run into problems beyond your current understanding while building the system. This is normal. Don't be impatient or panic. Only after going through this painful and tortuous process will you understand the whole project deeply and be comfortable maintaining the system later. You will also learn many things you haven't learned before; after all, hands-on practice on real projects is the fastest way to learn.

About problems: if you run into a problem during the setup, first try to solve it yourself (search Baidu or Google). If you can't, come back to me; don't be ashamed to ask, and I'll help analyze the possible causes. Of the problems customers have raised so far, 80% are basic problems and 10% are very low-level mistakes. In short, most of them are configuration problems.

About the business: if you know nothing about the business, not even what a K-line (candlestick chart) is, it is hard for me to guide you. You'd better ask your boss for a 10,000 yuan budget: spend 200 yuan trading crypto, which counts as getting familiar with the business, and spend the other 9,800 yuan treating your colleagues to hot pot and karaoke. Why not spend all 10,000 yuan on trading? Because if you enter the market knowing nothing, you will lose it all sooner or later, so you might as well enjoy the benefits your boss gave you.

Good luck! Sincerely!

[2022/02/22] Added [7. Frequently Asked Questions / Solution to the problem that disk space is not released after logs are deleted without stopping the service]

[2022/02/16] Added [6. Detailed system deployment / API documentation]

[2022/02/08] Added the iOS app packaging document as an attachment; see [System Deployment Details].

[2021/12/21] Added solutions for the major Apache Log4j vulnerability; see [FAQs].

[2021/11/28] The ETH London upgrade requires upgrading geth.

[2021/07/06] Updated the ETH node setup document to fix the problem where, after the ETH hard fork, the node could not synchronize blocks and deposits could not be detected.

[2021/07/04] Updated the software version required for MongoDB.

[2021/04/12] Updated geth to the latest version.

[2020/12/23] Because CentOS 6.x is no longer maintained, some commands were updated for deployment on CentOS 7.x.

2. Basic Preparations

2.1 Server configuration recommendations

The configuration I suggest is not mandatory. If your budget is limited, you can scale it down; if funds are sufficient, you can scale it up. Below are my recommended server configurations and the third-party services you need. Operating system: CentOS 7.x

1, Server 1

(4 cores, 8 GB RAM, the cloud provider's default 40 GB disk), web server with 5 to 10 Mbps bandwidth or more, used to host the front-end web resources. Nginx must be installed on this server for domain-name forwarding.

2, Server 2

(8 cores, 16 GB RAM, 100 GB SSD), 1 Mbps bandwidth (this machine mainly communicates over the intranet), used for the base services Kafka, MongoDB and Redis.

3, Server 3

(8 cores, 16 GB RAM, default 40 GB disk), 1 Mbps bandwidth (mainly intranet), used to deploy the microservices under 00_framework. Nginx must be installed on this server to forward requests to the microservices.

4, Server 4

(8 cores, 16 GB RAM, 800 GB disk, preferably SSD), 1 Mbps bandwidth (mainly intranet), used to deploy the BTC, USDT and ETH wallet nodes and to run the microservices under wallet_rpc.

5, Server 5

(4 cores, 8 GB RAM, 100 GB disk), 1 Mbps bandwidth (mainly intranet). Simply buy the MySQL database service offered by your cloud provider; MySQL 5.6 is required.

6, Aliyun OSS

(default 40 GB; it seems to cost only tens of dollars a year)

7, SMS service

The system supports several SMS platforms, Saime by default, and you can easily add your own.

8, Mail service

(Optional; the system has been modified so that users can register directly with an email address, without verification.)

Purchasing advice

1, Limited budget

Tencent Cloud (Hong Kong, Singapore, etc.), pay-as-you-go billing.

2, Sufficient budget

Alibaba Cloud (Aliyun: Hong Kong, Singapore, Germany, United States, etc.), annual or monthly subscription.

Summary

These are the servers and third-party services you need. In fact, all the microservices and software such as Kafka can run on a single server, but in production I recommend multiple servers, mainly to isolate data: keep the wallet nodes and base software such as Kafka separate from the services (the various jar packages). For MySQL, I recommend a more stable cloud database service. Of course, you can also build MySQL yourself, which saves some cost but increases maintenance cost.

2.2 Environment and Software Version

Local development environment

- Node V10.15.1

- NPM V6.4.1

- JDK V1.8.0_131

- IDEA 2019.2 Ultimate Edition

- Xcode 11.5

Server deployment environment

- Centos 7.6

- Java openjdk version “1.8.0_212”

- Kafka 2.2.1 (kafka_2.11-2.2.1, built for Scala 2.11)

- Zookeeper 3.4.14

- Mongodb 4.0.19

- Redis 3.2.12

- MySQL 5.6

- ETH 1.10.2

- BTC/USDT 0.11.0

2.3 Installing Java (the most concise way)

Because the microservices run on Java, you need to install a Java environment (which probably goes without saying). Installing Java on Linux is simple; here is an example on CentOS 7.x.

1, Check whether Java is installed

[root@VM-0-12-centos ~]# java

-bash: java: command not found

[root@VM-0-12-centos ~]# java -v

-bash: java: command not found

[root@VM-0-12-centos ~]# java -version

-bash: java: command not found

Unfortunately, the java command is not available, let alone able to run jar packages.

2, Use yum to list installable Java packages

[root@VM-0-12-centos usdt]# yum list java*

Available Packages

java-1.5.0-gcj.x86_64 1.5.0.0-29.1.el6 OS

java-1.5.0-gcj-devel.x86_64 1.5.0.0-29.1.el6 OS

java-1.5.0-gcj-javadoc.x86_64 1.5.0.0-29.1.el6 OS

java-1.5.0-gcj-src.x86_64 1.5.0.0-29.1.el6 OS

java-1.6.0-openjdk.x86_64 1:1.6.0.41-1.13.13.1.el6_8 OS

java-1.6.0-openjdk-demo.x86_64 1:1.6.0.41-1.13.13.1.el6_8 OS

.....................

The console lists many installable packages. We choose the 1.8 package:

java-1.8.0-openjdk.x86_64 1:1.8.0.252.b09-2.el6_10 OS

The installation command is as follows:

[root@VM-0-12-centos ~]# yum install java-1.8.0-openjdk.x86_64

After waiting for the installation to complete, enter the following command:

[root@VM-0-8-centos ~]# java

Usage: java [-options] class [args...]

(to execute a class)

or java [-options] -jar jarfile [args...]

(to execute a jar file)

where options include:

-d32 use a 32-bit data model if available

-d64 use a 64-bit data model if available

-server to select the "server" VM

The default VM is server,

because you are running on a server-class machine.

-cp <class search path of directories and zip/jar files>

-classpath <class search path of directories and zip/jar files>

A : separated list of directories, JAR archives,

and ZIP archives to search for class files.

.....................
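Because the services target JDK 1.8 (see 2.2), it can help to confirm the installed major version before launching any jar. Below is a minimal POSIX shell sketch; the function name java_major_version is hypothetical, and it assumes the first line of `java -version` has the usual form `openjdk version "1.8.0_212"`.

```shell
# Sketch (hypothetical helper): print the major Java version from the first line of `java -version`.
# Java 8 reports "1.8.0_xxx" (major = second field); Java 9+ reports "11.0.x" (major = first field).
java_major_version() {
  ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  case "$ver" in
    1.*) printf '%s\n' "$ver" | cut -d. -f2 ;;
    *)   printf '%s\n' "$ver" | cut -d. -f1 ;;
  esac
}

# Usage on the server (java -version writes to stderr, hence 2>&1):
# line=$(java -version 2>&1 | head -n 1)
# [ "$(java_major_version "$line")" = "8" ] || echo "JDK 1.8 expected" >&2
```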

Now you can use java to run some jar packages:

[root@VM-0-8-centos exchange]# ll

total 558484

-rw-r--r-- 1 root 40721465 August 01:04 cloud.jar

-rw-r--r-- 1 root 105173026 August 01:05 exchange-api.jar

-rw-r--r-- 1 root 105180109 August 01:05 exchange.jar

-rw-r--r-- 1 root 106993131 August 01:05 market.jar

-rw-r--r-- 1 root 109531189 August 4 23:20 ucenter-api.jar

-rw-r--r-- 1 root 103676574 August 01:05 wallet.jar

[root@VM-0-8-centos exchange]# java -jar cloud.jar

2.4 Installing and Using screen

Some people like to run background tasks with the nohup command, while others prefer screen. There is no big difference; both work fine. In my view the main difference is how you view the logs. For a jar started with nohup, you need to cd into the log directory and read the log separately with a command such as tail -f -n 50; for a jar running in screen, screen -r xxxx may be all you need to see the real-time log.

Introduction

Screen is free software from the GNU Project for multiplexing command-line terminals. It lets users open multiple local or remote command-line sessions at the same time and switch freely between them. GNU Screen can be thought of as a command-line version of a window manager: it provides a unified interface and the corresponding functions for managing multiple sessions. In a Screen environment, every session runs independently, with its own number, input, output and window buffer. Users can switch between windows with shortcut keys and freely redirect each window's input and output.

Installation

[root@VM-0-8-centos ~]# yum install screen.x86_64

Basic Syntax

$> screen [-AmRvx -ls -wipe][-d <job name>][-h <rows>][-r <job name>][-s <shell>][-S <job name>]

-A Resize all windows to the current terminal size.

-d <job name> Detach the specified screen session.

-h <rows> Set the number of scrollback buffer lines for the window.

-m Force creation of a new screen session, even when already running inside screen.

-r <job name> Resume (reattach) a detached screen session.

-R First try to resume a detached session; if none is found, create a new one.

-s <shell> Specify the shell to run when a new window is created.

-S <job name> Specify the name of the screen session.

-v Display version information.

-x Attach to a session that is not detached (multi-display mode).

-ls or -list List all current screen sessions.

-wipe Check all current screen sessions and remove those that are dead.

Common screen commands

screen -S yourname -> Create a new session named yourname

screen -ls -> List all current sessions

screen -r yourname -> Reattach to the session yourname

screen -d yourname -> Detach the session yourname remotely

screen -d -r yourname -> Detach yourname wherever it is attached and reattach it here

Keyboard commands (C-a = Ctrl + A)

C-a ? -> Show all key bindings

C-a c -> Create a new window running a shell and switch to it

C-a n -> Next: switch to the next window

C-a p -> Previous: switch to the previous window

C-a 0..9 -> Switch to window 0..9

C-a [Space] -> Switch to the next window, cycling through windows 0 to 9 in order

C-a C-a -> Toggle between the two most recently used windows

C-a x -> Lock the current window; unlock it with the user's password

C-a d -> Detach: temporarily leave the current session, putting the screen session (which may contain several windows) into the background and returning to the state before you entered screen. The processes running in each window (foreground or background) keep executing, even after you log out.

C-a z -> Suspend the current session into the background; use the shell's fg command to return.

C-a w -> Show a list of all windows

C-a t -> Time: show the current time and system load

C-a k -> Kill window: force the current window to close

C-a [ -> Enter copy mode, in which you can scroll back, search and copy as in vi:

C-b Backward, PageUp

C-f Forward, PageDown

H (upper case) High: move the cursor to the upper-left corner

L Low: move the cursor to the lower-left corner

0 Move to the beginning of the line

$ Move to the end of the line

w Forward one word

b Backward one word

Space First press marks the start of the region, second press marks the end

Esc Exit copy mode

C-a ] -> Paste: paste the text just selected in copy mode

Sample operation

[root@VM-0-8-centos exchange]# screen -S cloud

Create a new session

[root@VM-0-8-centos exchange]# java -jar cloud.jar

Run the Java program, then press Ctrl+A followed by D to detach the session (the Java program inside keeps running).

[root@VM-0-8-centos exchange]# screen -ls

There are screens on:

8776.wallet (Detached)

7231.exchangeapi (Detached)

1594.cloud (Detached)

2270.exchange (Detached)

6253.market (Detached)

8002.ucenter (Detached)

6 Sockets in /var/run/screen/S-root.

List the sessions

[root@VM-0-8-centos exchange]# screen -r cloud

Return to the session
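If you run every service in its own session, the steps above can be scripted. A minimal sketch, assuming the jars sit in the current directory and each session is named after its jar (as in the screen -ls listing above); start_jars_in_screen and DRY_RUN are hypothetical names. screen -dmS creates a session that is already detached, so no Ctrl+A D is needed.

```shell
# Sketch (hypothetical helper): start each jar in its own detached screen session
# named after the jar (cloud.jar -> session "cloud"), in the order given.
# With DRY_RUN=1 the commands are only printed.
start_jars_in_screen() {
  for jar in "$@"; do
    name=$(basename "$jar" .jar)
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "screen -dmS $name java -jar $jar"
    else
      # -d -m: create the session already detached; -S: give it a name
      screen -dmS "$name" java -jar "$jar"
    fi
  done
}

# Usage (in the directory holding the jars):
# start_jars_in_screen cloud.jar market.jar exchange.jar
# screen -ls
# screen -r cloud
```

One trade-off: a session started this way closes when its java process exits, so a crashed service simply disappears from screen -ls instead of leaving its last log lines on screen.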

2.5 nohup Command Practice

Beginners should read this; experts can skip it so it doesn't waste their precious time!

Background

A process can run in the background, where "background" means the background of the current login terminal. When we manage a server remotely and start a background command on the remote terminal, will the command keep running if we log out before it finishes? Usually not: the processes receive a hangup signal (SIGHUP) when the terminal session ends, and the command is interrupted. So how do we run background commands on a remote terminal that survive logout? The nohup command is one solution. Of course, if you prefer to keep a session alive with screen, you don't need nohup; and if you're feeling especially adventurous, you can mix the two until you're thoroughly confused.

Introduction

The nohup command keeps a job running correctly in the background after you leave the terminal. Its basic format is:

[root@localhost ~]# nohup [command] &

Note that & makes the command run in the background of the terminal; without &, the command runs in the foreground. For example:

[root@localhost ~]# nohup find / -print > /root/file.log &

[3] 2349

# Use find to print all files under /, running in the background

[root@localhost ~]# nohup: ignoring input

# nohup prints this hint

Next, act quickly before find finishes: log out, log back in and run ps aux, and you will see that find is still running. If find finishes too quickly, write a loop script and run it with nohup instead. For example:

[root@localhost ~]# vi for.sh

#!/bin/bash

for ((i=1; i<=1000; i=i+1))    # loop 1000 times

do

echo 11 >> /root/for.log    # append 11 to for.log

sleep 10s    # sleep 10 seconds per iteration

done

[root@localhost ~]# chmod 755 for.sh

[root@localhost ~]# nohup /root/for.sh &

[1] 2478

[root@localhost ~]# nohup: ignoring input and appending output to 'nohup.out'

# Run the script in the background

Next, log out; after logging back in, you can still see the script with ps aux.

Project practice

Now that you know nohup, how about running the jar packages in the background with it?

[root@localhost ~]# nohup java -jar exchange.jar &
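With the command above, nohup appends the jar's console output to nohup.out in the current directory. A slightly more manageable variant, sketched below, gives each service its own output file and records its PID so it can be stopped later with kill. start_bg and the .out/.pid file names are hypothetical conventions, not part of the project.

```shell
# Sketch (hypothetical helper): run a command in the background with nohup, sending its
# console output to <name>.out and recording its PID in <name>.pid (current directory).
start_bg() {
  name=$1
  shift
  nohup "$@" > "$name.out" 2>&1 &
  echo $! > "$name.pid"
}

# Usage:
# start_bg exchange java -jar exchange.jar
# tail -f exchange.out           # console output
# kill "$(cat exchange.pid)"     # stop the service
```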

You might ask: how do I view the log?

That depends on where the jar writes its logs. Open the microservice's logback configuration file logback-spring.xml and you will find a setting like: <FileNamePattern>/logs/ucenter/%d{yyyy-MM-dd}/%d{yyyy-MM-dd}.%i.log</FileNamePattern>. Now you know where the log is. To view it, go to that day's directory and tail the file.
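The date directory in that pattern changes every day, so a helper that builds the current path can save typing. This sketch assumes each service uses the same pattern with its own directory under /logs (only ucenter is shown here); log_file is a hypothetical helper, and %i is logback's rollover index, 0 for the first file of the day.

```shell
# Sketch (hypothetical helper): build the path produced by the logback pattern
#   /logs/<service>/%d{yyyy-MM-dd}/%d{yyyy-MM-dd}.%i.log
# $1: service directory (e.g. ucenter); $2: date, default today; $3: index %i, default 0
log_file() {
  day=${2:-$(date +%Y-%m-%d)}
  echo "/logs/$1/$day/$day.${3:-0}.log"
}

# Usage: tail -f -n 50 "$(log_file ucenter)"
```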

[root@VM-0-8-centos 2020-08-01]# cd /logs/ucenter/2020-08-05/

[root@VM-0-8-centos 2020-08-05]# ll

total 148

-rw-r--r-- 1 root 144189 August 5 01:22 2020-08-05.0.log

[root@VM-0-8-centos 2020-08-05]# tail -f -n 10 2020-08-05.0.log

01:22:50.412 [pool-8-thread-1] INFO com.bizzan.bitrade.job.CheckExchangeRate - remote call:url=http://bitrade-market/market/exchange-rate/usd-cny

01:22:50.414 [pool-8-thread-1] INFO com.bizzan.bitrade.job.CheckExchangeRate - remote call:url=http://bitrade-market/market/exchange-rate/usd/{coin}, unit=BTC

01:22:50.414 [pool-8-thread-1] INFO com.bizzan.bitrade.job.CheckExchangeRate - remote call:url=http://bitrade-market/market/exchange-rate/usd/{coin}, unit=BSV

01:22:50.415 [pool-8-thread-1] INFO com.bizzan.bitrade.job.CheckExchangeRate - remote call:url=http://bitrade-market/market/exchange-rate/usd/{coin}, unit=BCH

01:22:50.416 [pool-8-thread-1] INFO com.bizzan.bitrade.job.CheckExchangeRate - remote call:url=http://bitrade-market/market/exchange-rate/usd/{coin}, unit=EUSDT

01:22:50.416 [pool-8-thread-1] INFO com.bizzan.bitrade.job.CheckExchangeRate - remote call:url=http://bitrade-market/market/exchange-rate/usd/{coin}, unit=XRP

01:22:50.417 [pool-8-thread-1] INFO com.bizzan.bitrade.job.CheckExchangeRate - remote call:url=http://bitrade-market/market/exchange-rate/usd/{coin}, unit=EOS

3. Basic Software Installation

3.1 Installing and Configuring MongoDB

You can install the base software on any server with good performance (such as 8 cores and 16 GB RAM). The disk should not be too small, preferably around 100 GB; because of the frequent reads and writes, an SSD is recommended.

The deployment demonstrated here is a single-machine setup. You can also choose a cluster deployment, which can greatly improve performance but requires more servers.

MongoDB is mainly used to store market data, wallet deposit records, and robot configuration.

Install MongoDB

1, Download & start MongoDB

wget https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-rhel62-4.0.19.tgz

tar -xvf mongodb-linux-x86_64-rhel62-4.0.19.tgz

Create a new file mongodb.conf in the /data/mongodb/ directory, then cd into MongoDB's bin directory and run:

./mongod -f /data/mongodb/mongodb.conf

2, Test MongoDB:

cd to the bin directory and run the connection command: ./mongo 127.0.0.1:27017

3, Configuration file example (mongodb.conf):

dbpath=/data/mongodb/data

logpath=/data/mongodb/log/mongodb.log

logappend=true

port=27017

fork=true

auth=true

bind_ip=0.0.0.0
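mongod will not start if the dbpath directory or the directory holding logpath is missing, so create them before the first start. A minimal sketch that does both and writes the configuration above under a base directory of your choice; write_mongodb_conf is a hypothetical name, and the values simply mirror the example.

```shell
# Sketch (hypothetical helper): create the directories mongod needs and write mongodb.conf.
# $1: base directory (this guide uses /data/mongodb)
write_mongodb_conf() {
  base=$1
  # mongod does not create dbpath or the log directory by itself
  mkdir -p "$base/data" "$base/log"
  cat > "$base/mongodb.conf" <<EOF
dbpath=$base/data
logpath=$base/log/mongodb.log
logappend=true
port=27017
fork=true
auth=true
bind_ip=0.0.0.0
EOF
}

# Usage:
# write_mongodb_conf /data/mongodb
# cd <mongodb>/bin && ./mongod -f /data/mongodb/mongodb.conf
```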

Create databases and accounts

1, Create the administrator account

use admin

db.createUser({user:'root', pwd:'123456789', roles:[{role:'userAdminAnyDatabase', db:'admin'}]})

db.auth("root", "123456789")

2, Create the market data database (bitrade) & account

use bitrade

db.createUser({user:'bizzan', pwd:'123456789', roles:[{role:'dbOwner', db:'bitrade'}]})

3, Create the wallet database & account

use wallet

db.createUser({user:'bizzan', pwd:'123456789', roles:[{role:'dbOwner', db:'wallet'}], mechanisms:['SCRAM-SHA-1']})

4, Create the robot database & account

use robot

db.createUser({user:'bizzan', pwd:'123456789', roles:[{role:'dbOwner', db:'robot'}]})

db.auth("bizzan", "123456789")

3.2 ZooKeeper and Kafka

Note: this document installs ZooKeeper and Kafka on a single machine. For a multi-server deployment, refer to the official documentation. Kafka depends on ZooKeeper; what ZooKeeper does is a basic question, so look it up yourself.

Install ZooKeeper

1, Download ZooKeeper (software version: zookeeper-3.4.14.tar.gz)

2, Copy it to the /data/kafka/ directory and extract it with tar -xvf:

tar -xvf zookeeper-3.4.14.tar.gz

3, Create the ZooKeeper data directory /data/kafka/zookeeper:

mkdir zookeeper

4, Modify conf/zoo_sample.cfg and rename it to zoo.cfg

(or upload a prepared zoo.cfg to the conf folder)

5, Start it from the command line (in the bin directory):

screen -S zookeeper ./zkServer.sh start-foreground

6, Test the connection

bin/zkCli.sh -server 10.140.0.12:2181

Configuration file example (zoo.cfg):

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/kafka/zookeeper

clientPort=2181
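Steps 3 and 4 can also be scripted. The sketch below (write_zoo_cfg is a hypothetical name) creates the data directory and writes the zoo.cfg shown above. ZooKeeper's keys are case-sensitive (tickTime, dataDir and so on), so they are written exactly as ZooKeeper expects.

```shell
# Sketch (hypothetical helper): create the ZooKeeper data directory and write zoo.cfg.
# $1: ZooKeeper install directory (e.g. /data/kafka/zookeeper-3.4.14)
# $2: data directory (this guide uses /data/kafka/zookeeper)
write_zoo_cfg() {
  mkdir -p "$1/conf" "$2"
  cat > "$1/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$2
clientPort=2181
EOF
}

# Usage: write_zoo_cfg /data/kafka/zookeeper-3.4.14 /data/kafka/zookeeper
```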

Install Kafka

1, Download Kafka (software version: kafka_2.11-2.2.1.tgz)

2, Upload it to the /data/kafka/kafka/ directory and extract it with tar -xvf

3, Create the log folder /data/kafka/kafka/log and change its permissions: chmod 777 log

4, Modify the config/server.properties configuration file

Change: advertised.listeners=PLAINTEXT://<this server's IP>:9092

5, Start it from the command line:

screen -S kafka bin/kafka-server-start.sh config/server.properties
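Step 4 can be done with sed instead of an editor. A minimal sketch, assuming GNU sed (the default on CentOS) and a broker listening on port 9092; set_advertised_listener is a hypothetical name. It replaces an existing advertised.listeners line, or appends one if the line is absent or commented out.

```shell
# Sketch (hypothetical helper): point advertised.listeners at this broker's intranet IP.
# $1: path to config/server.properties; $2: IP that other servers use to reach this broker
set_advertised_listener() {
  line="advertised.listeners=PLAINTEXT://$2:9092"
  if grep -q '^advertised\.listeners=' "$1"; then
    # replace the existing, uncommented setting in place (GNU sed -i)
    sed -i "s|^advertised\.listeners=.*|$line|" "$1"
  else
    # no active setting (missing or commented out): append one
    echo "$line" >> "$1"
  fi
}

# Usage: set_advertised_listener config/server.properties 172.19.0.6
```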

Configuration file example (server.properties):

broker.id=1

############################# Socket Server Settings ###########################

listeners=PLAINTEXT://:9092

advertised.listeners=PLAINTEXT://172.19.0.6:9092

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

############################### Log Basics ######################################

log.dirs=/data/kafka/log

num.partitions=1

num.recovery.threads.per.data.dir=1

############################# Internal Topic Settings #########################

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

######################### Log Retention Policy #################################

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

############################# Zookeeper #############################

zookeeper.connect=localhost:2181

zookeeper.connection.timeout.ms=6000

session.timeout.ms=60000

############################# Group Coordinator Settings #########################

group.initial.rebalance.delay.ms=0

3.3 Redis

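1. Install Redis (on CentOS 7 the redis package is provided by the EPEL repository; if yum cannot find it, run yum install epel-release -y first)

yum install redis -y

2. Modify the configuration file

Copy the redis.conf file to the /etc/ directory. You can change the password in the configuration file with requirepass (default: 123456789).

3. Start Redis: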

redis-server /etc/redis.conf
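Since the sample configuration ships with requirepass 123456789 (and protected-mode no), a quick check before starting Redis can catch a forgotten default password. A sketch; check_redis_password is a hypothetical name, and it treats the last requirepass line as the effective one.

```shell
# Sketch (hypothetical helper): fail if redis.conf has no requirepass or still uses the sample one.
# $1: path to redis.conf. If requirepass appears several times, the last line wins.
check_redis_password() {
  pass=$(sed -n 's/^requirepass[[:space:]]\{1,\}//p' "$1" | tail -n 1)
  if [ -z "$pass" ]; then
    echo "requirepass is not set in $1" >&2
    return 1
  fi
  if [ "$pass" = "123456789" ]; then
    echo "requirepass in $1 still uses the sample value" >&2
    return 1
  fi
}

# Usage:
# check_redis_password /etc/redis.conf && redis-server /etc/redis.conf
# redis-cli -a '<your password>' ping    # should print PONG
```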

Configuration file example (redis.conf):

protected-mode no

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize yes

supervised no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile /var/log/redis/redis.log

databases 16

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir /var/lib/redis

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

requirepass 123456789

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

aof-rewrite-incremental-fsync yes

3.4 MySQL

Compatible versions: 5.6 / 5.7. Suggested configuration: 4 cores, 8 GB RAM, 40 GB data disk.

About self-built MySQL

It is recommended to buy a cloud MySQL service.

Some people build MySQL on their own servers, which is fine, but operating a MySQL instance is not as simple as you might think, especially for a system with high stability requirements such as an exchange.

If you build it yourself, you will be in trouble whenever something goes wrong. If you have 5 to 10 years of DBA experience, go ahead and build it yourself; if you are just an engineer who has developed against MySQL or tinkered with it in your spare time, I suggest you don't.

Tip: when creating the database, choose utf8 as the character set and utf8_general_ci as the collation.

Import data

Import the two scripts:

- db.sql

- data.sql
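A sketch of the import, assuming the mysql command-line client and that db.sql creates the tables and data.sql fills them, as the file names suggest. The host, user and database name are placeholders; use the ones configured in your microservices. create_db_sql is a hypothetical helper that only prints a CREATE DATABASE statement with the utf8 / utf8_general_ci settings from the tip above, for the case where db.sql does not create the database itself.

```shell
# Sketch (hypothetical helper): print a CREATE DATABASE statement with utf8 / utf8_general_ci.
# $1: database name (placeholder: use the name configured in your microservices)
create_db_sql() {
  printf 'CREATE DATABASE IF NOT EXISTS `%s` DEFAULT CHARACTER SET utf8 COLLATE utf8_general_ci;\n' "$1"
}

# Usage (host, user and database name are placeholders; schema before data):
# create_db_sql your_db | mysql -h your-mysql-host -u root -p
# mysql -h your-mysql-host -u root -p --default-character-set=utf8 your_db < db.sql
# mysql -h your-mysql-host -u root -p --default-character-set=utf8 your_db < data.sql
```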

4. Nginx Configuration

4.1 Nginx - Microservice Forwarding

This Nginx instance is installed on the server that runs the microservices under 00_framework.

Install Nginx

yum install nginx.x86_64

Configuration file and resource directories

After installation, the default Nginx directories are:

Configuration file directory: /etc/nginx/conf.d; resource file directory: /usr/share/nginx/html/

Modify the configuration file

Upload the configuration file default.conf.

Start Nginx

service nginx start / service nginx stop
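After editing default.conf, it is worth checking that each location forwards to the port of the microservice you actually started. The sketch below lists location/proxy_pass pairs with awk; list_routes is a hypothetical name, it assumes one directive per line as in the example, and for exact-match or regex locations it prints the modifier (= or ~) instead of the path. Running nginx -t before starting also catches syntax errors.

```shell
# Sketch (hypothetical helper): list "<location> <proxy_pass target>" pairs from an nginx
# config file, assuming one directive per line.
list_routes() {
  awk '
    $1 == "location" { loc = $2 }
    $1 == "proxy_pass" {
      target = $2
      sub(/;$/, "", target)   # drop a trailing semicolon
      print loc, target
    }
  ' "$1"
}

# Usage:
# list_routes /etc/nginx/conf.d/default.conf
# nginx -t && service nginx start
```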

Configuration file example (default.conf):

server {

listen 8801;

server_name localhost;

location / {

root /usr/share/nginx/html;

index index.html index.htm;

}


location /market {

client_max_body_size 5m;

proxy_pass http://localhost:6004;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Scheme $scheme;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

}

location /exchange {

client_max_body_size 5m;

proxy_pass http://localhost:6003;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

}

location /uc {

client_max_body_size 5m;

proxy_pass http://localhost:6001;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

}

location /admin {

client_max_body_size 5m;

proxy_pass http://localhost:6010;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

}

location /chat {

client_max_body_size 5m;

proxy_pass http://localhost:6008;

proxy_set_header Host $host;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

proxy_set_header X-Real-IP $remote_addr;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

4.2 Nginx - Domain Forwarding

This Nginx instance is installed on the web server.

Dependencies

yum install -y wget

yum install -y vim-enhanced

yum install -y make cmake gcc gcc-c++

yum install -y pcre pcre-devel

yum install -y zlib zlib-devel

yum install -y openssl openssl-devel

1, Download Nginx

wget http://nginx.org/download/nginx-1.12.2.tar.gz

2, Compile and install

tar -zxvf nginx-1.12.2.tar.gz

cd nginx-1.12.2

./configure --prefix=/usr/local/nginx \
--pid-path=/var/run/nginx/nginx.pid \
--lock-path=/var/lock/nginx.lock \
--error-log-path=/var/log/nginx/error.log \
--http-log-path=/var/log/nginx/access.log \
--with-http_gzip_static_module \
--http-client-body-temp-path=/var/temp/nginx/client \
--http-proxy-temp-path=/var/temp/nginx/proxy \
--http-fastcgi-temp-path=/var/temp/nginx/fastcgi \
--http-uwsgi-temp-path=/var/temp/nginx/uwsgi \
--with-http_stub_status_module \
--with-http_ssl_module \
--http-scgi-temp-path=/var/temp/nginx/scgi \
--with-stream \
--with-stream_ssl_module

make

make install

3, Upload the SSL certificates to the /etc/nginx/ssl/ directory. Apply for free certificates for two domain names:

www.xxx.com, api.xxx.com

4, Modify the configuration file

See the reference example below.

5, Start Nginx

Go to /usr/local/nginx/sbin/ and run ./nginx. After modifying the configuration file, reload it with ./nginx -s reload

Configuration reference (nginx.conf):

#user nobody;

worker_processes 2;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

pid logs/nginx.pid;

events {

worker_connections 1024;

}

# Listen on TCP socket ports (stream module)

stream {

upstream market_server {

hash $remote_addr consistent;

server 172.19.0.8:28901;

}

server {

listen 28901;

proxy_pass market_server;

}

upstream otc_heart_server {

hash $remote_addr consistent;

server 172.19.0.8:28902;

}

server {

listen 28902;

proxy_pass otc_heart_server;

}

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

keepalive_timeout 65;

server {

listen 80;

server_name api.xxxx.com;

rewrite ^(.*)$ https://$host$1 permanent;

location / {

add_header 'Access-Control-Allow-Origin' '*' always;

proxy_pass http://172.19.0.8:8801;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Scheme $scheme;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

break;

gzip on;

gzip_http_version 1.1;

gzip_comp_level 3;

gzip_types text/plain application/json application/x-javascript application/css application/xml application/xml+rss text/javascript application/x-httpd-php image/jpeg image/gif image/png image/x-ms-bmp;

}

}

server {

listen 80;

server_name www.xxxx.com;

#charset koi8-r;

#access_log logs/host.access.log main;

rewrite ^(.*)$ https://$host$1 permanent;

location / {

root html;

index index.html index.htm;

try_files $uri $uri/ /index.html;

gzip on;

gzip_http_version 1.1;

gzip_comp_level 3;

gzip_types text/plain application/json application/x-javascript application/css application/xml application/xml+rss text/javascript application/x-httpd-php image/jpeg image/gif image/png image/x-ms-bmp;

}

location ~ .*\.(eot|ttf|ttc|otf|woff|woff2|svg)(.*) {

add_header Access-Control-Allow-Origin http://www.xxxx.com;

}

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

server {

listen 80;

server_name xxxx.com;

rewrite ^(.*)$ https://$host$1 permanent;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

root html;

index index.html index.htm;

try_files $uri $uri/ /index.html;

}

location ~ .*\.(eot|ttf|ttc|otf|woff|woff2|svg)(.*) {

add_header Access-Control-Allow-Origin http://www.xxxx.com;

gzip on;

gzip_http_version 1.1;

gzip_comp_level 3;

gzip_types text/plain application/json application/x-javascript application/css application/xml application/xml+rss text/javascript application/x-httpd-php image/jpeg image/gif image/png image/x-ms-bmp;

}

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

server {

listen 443 ssl;

server_name www.xxxx.com;

ssl on;

ssl_certificate /etc/nginx/ssl/1_www.xxxx.com_bundle.crt;

ssl_certificate_key /etc/nginx/ssl/2_www.xxxx.com.key;

5. Deployment of Wallets

5.1 BTC and USDT Node

1, Mount the 800 GB disk on the server

Building a blockchain node requires a large-capacity disk. First attach the disk in the cloud console, then open an SSH session:

List the available disks:

fdisk -l

Format the disk:

mkfs.ext4 /dev/vdb

Mount the disk on the /data directory:

mount /dev/vdb /data
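A mount made with the mount command is lost after a reboot. One common way to make it permanent is an /etc/fstab entry keyed by the disk's UUID; the sketch below appends one only if it is not already present. add_fstab_entry is a hypothetical name, the UUID comes from blkid, and ext4 matches the mkfs.ext4 step above.

```shell
# Sketch (hypothetical helper): make a mount survive reboots with a UUID-based fstab entry.
# $1: fstab file (normally /etc/fstab); $2: filesystem UUID; $3: mount point
add_fstab_entry() {
  # only append if no entry for this UUID exists yet
  if ! grep -q "^UUID=$2[[:space:]]" "$1"; then
    echo "UUID=$2 $3 ext4 defaults 0 0" >> "$1"
  fi
}

# Usage:
# uuid=$(blkid -s UUID -o value /dev/vdb)
# add_fstab_entry /etc/fstab "$uuid" /data
# mount -a    # check the new entry without rebooting
```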

2, Download, install and configure the Omni runtime package

Download address:

Example commands:

cd /data

mkdir usdt

cd usdt

wget https://github.com/OmniLayer/omnicore/releases/download/v0.11.0/omnicore-0.11.0-x86_64-linux-gnu.tar.gz

tar -zxvf omnicore-0.11.0-x86_64-linux-gnu.tar.gz

Create the block data directory:

mkdir data

Create the configuration file:

touch bitcoin.conf

Enter the Omni program directory:

cd /data/usdt/omnicore-0.11.0/bin/

Start the node (with nohup or screen):

./omnicored -conf=/data/usdt/bitcoin.conf -reindex

The console output looks like this (this sample was captured with an earlier version, Omni Core v0.5.0):

2019-08-13 03:20:56 Initializing Omni Core v0.5.0 [main]

2019-08-13 03:20:56 Loading trades database: OK

2019-08-13 03:20:56 Loading send-to-owners database: OK

2019-08-13 03:20:56 Loading TX meta-info database: OK

2019-08-13 03:20:56 Loading Smart Property database: OK

2019-08-13 03:20:56 Loading master transactions database: OK

2019-08-13 03:20:56 Loading fee cache database: OK

2019-08-13 03:20:56 Loading fee history database: OK

2019-08-13 03:20:56 Loading persistent state: NONE (no usable previous state found)

2019-08-13 03:20:56 Omni Core initialization completed
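While the node syncs, you can also run the `getblockchaininfo` call (used with omnicore-cli in the next step) from a script over JSON-RPC. Below is a minimal Python sketch; it is not part of the project. It assumes the RPC endpoint `http://127.0.0.1:8334/` and the `bizzan`/`123456789` credentials from the omnicore-cli example in this section, so replace them with your own.

The script compares `blocks` (verified) with `headers` (known). It also reports `verificationprogress`, which the node estimates from transaction counts. Early in the sync, that estimate therefore lags well behind the block ratio.

```python
import base64
import json
import urllib.request

# Assumption: same RPC settings as the omnicore-cli example in this section.
RPC_URL = "http://127.0.0.1:8334/"
RPC_USER = "bizzan"
RPC_PASSWORD = "123456789"


def rpc_call(method, params=None, url=RPC_URL, user=RPC_USER,
             password=RPC_PASSWORD, timeout=10.0):
    """POST a Bitcoin-style JSON-RPC request and return its "result" field."""
    payload = json.dumps({"jsonrpc": "1.0", "id": "sync-check",
                          "method": method, "params": params or []}).encode()
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(url, data=payload, headers={
        "Content-Type": "application/json",
        "Authorization": f"Basic {token}",
    })
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    if body.get("error"):
        raise RuntimeError(body["error"])
    return body["result"]


def sync_summary(info):
    """Summarize a getblockchaininfo result: blocks vs. headers plus the node's estimate."""
    blocks, headers = info["blocks"], info["headers"]
    return {
        "blocks": blocks,
        "headers": headers,
        "remaining": max(headers - blocks, 0),
        "block_percent": 100.0 * blocks / headers if headers else 0.0,
        # Estimated from transaction counts, so it lags block_percent early on.
        "verification_percent": 100.0 * info["verificationprogress"],
        "caught_up": headers > 0 and blocks >= headers,
    }
```

Usage: `print(sync_summary(rpc_call("getblockchaininfo")))`. For the sample output shown in this section, this gives about 50.2 % of blocks but only about 6.2 % verification progress.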

Check the synchronization progress:

./omnicore-cli -rpcconnect=127.0.0.1 -rpcuser=bizzan -rpcpassword=123456789 -rpcport=8334 getblockchaininfo

The console returns something like this:

{
  "chain": "main",
  "blocks": 295978,
  "headers": 589877,
  "bestblockhash": "0000000000000060a02b55752c56edeeafe25a47c1abfdb65468bf1e5c985",
  "difficulty": 6119726089.128147,
  "mediantime": 1397562780,
  "verificationprogress": 0.06151967312105129,
  "chainwork": "000000000000000000000000000000000000000000003fb9da1c8bfa17a8f117",
  ...
}

Configuration file example (bitcoin.conf):

# Data directory (the full path of the block data directory created above)

datadir=/data/usdt/data

# Network: 0 = mainnet, 1 = testnet

testnet=0

# Accept JSON-RPC commands (enables the RPC service)

server=1

# Set gen=1 to try mining; leave it at 0

gen=0

# Enable the full transaction index

txindex=1

# Run in the background as a daemon (0 = no, 1 = yes)

daemon=0

# RPC listening port. Set it explicitly and make it match the -rpcport used with omnicore-cli above.
# Bitcoin Core defaults: RPC 8332 (mainnet) / 18332 (testnet); 8333 / 18333 are the P2P ports, so do not reuse them here.

rpcport=8334

# RPC username and password. Set your own instead of relying on defaults.

rpcuser=bizzan

rpcpassword=123456789

# IPs allowed to call the RPC interface. By default only localhost may connect;
# 0.0.0.0/0 opens RPC to every IP, so change it to your own servers' IPs.

rpcallowip=0.0.0.0/0

rpcconnect=127.0.0.1

5.2 ETH Node

Notice

This section only covers the basic operations. Blockchain node software is updated constantly, and I cannot keep every version number in this document up to date. Download the latest version from the official website and follow the steps described here.

Steps

6. Detailed explanation of the system

6.1 Configuration changes for the Java services

If you have read the documentation on my project's home page, the architecture diagram should have told you what each Java microservice does. The following are only suggested configuration changes; adapt them to your own environment.

Configuration file:

application.properties, located in the resources directory of each Java module. (For anyone who knows Spring Boot this goes without saying, but I mention it just in case.)

Modify:

eureka.client.serviceUrl.defaultZone=http://172.19.0.8:7000/eureka/

Change the IP address in this setting to the IP of the server where cloud.jar runs.

spring.datasource.url=jdbc:mysql://172.19.0.5:3306/bizzan?characterEncoding=utf-8&serverTimezone=GMT%2B8&useSSL=false

spring.datasource.driver-class-name=com.mysql.jdbc.Driver

spring.datasource.username=bizzan

spring.datasource.password=123456789

The database settings need no further explanation.

spring.kafka.bootstrap-servers=172.19.0.6:9092

Kafka address: change the IP to that of your Kafka server.

spring.data.mongodb.uri=mongodb://bizzan:123456@172.19.0.6:27017/bitrade

spring.data.mongodb.database=bitrade

MongoDB configuration.

sms.driver=diyi

sms.gateway=

sms.username=111111

sms.password=xxxxxxxxxxxxxxxx

sms.sign=BBEX

sms.internationalGateway=

sms.internationalUsername=

sms.internationalPassword=

These are the main settings. The rest, such as the Alibaba Cloud OSS AccessKey/AccessSecret and Redis, are just as straightforward.
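Every Java module has its own application.properties, so changing the same IPs by hand in each one is error-prone. Below is a minimal Python sketch that rewrites selected keys across all modules at once. It assumes the standard Maven layout (`src/main/resources/application.properties`) and UTF-8 files. The helper names are illustrative and are not part of the project.

```python
import re
from pathlib import Path
from typing import Dict


def set_properties(text: str, updates: Dict[str, str]) -> str:
    """Rewrite 'key=value' lines whose key is in `updates`; every other line is kept verbatim."""
    out = []
    for line in text.splitlines(keepends=True):
        body = line.rstrip("\r\n")
        ending = line[len(body):]  # preserve the original line ending
        for key, value in updates.items():
            # Exact key, optional spaces, then '=' (so a longer key with the same prefix is skipped).
            if re.match(rf"\s*{re.escape(key)}\s*=", body):
                body = f"{key}={value}"
                break
        out.append(body + ending)
    return "".join(out)


def update_modules(root: str, updates: Dict[str, str]) -> int:
    """Apply `updates` to every module's application.properties under `root`; return files changed."""
    changed = 0
    for path in Path(root).rglob("src/main/resources/application.properties"):
        old = path.read_text(encoding="utf-8")
        new = set_properties(old, updates)
        if new != old:
            path.write_text(new, encoding="utf-8")
            changed += 1
    return changed
```

Usage, with example addresses taken from the server plan in 6.3 (cloud.jar on 172.22.0.3, Kafka on 172.22.0.12): `update_modules("/path/to/project", {"eureka.client.serviceUrl.defaultZone": "http://172.22.0.3:7000/eureka/", "spring.kafka.bootstrap-servers": "172.22.0.12:9092"})`. Only lines whose key matches exactly are touched, so comments and unrelated settings survive. Review the result with `git diff` before building.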

6.2 Packaging and Running the Front End

Because the system separates the front end from the back end, every front end requests data from the service API through Ajax.

Web_Front Project

Set the API addresses in the main.js file. I won't say where main.js is; otherwise you might think I'm questioning your ability.

Vue.prototype.rootHost = "https://www.xxxx.io";

Vue.prototype.host = "https://api.xxxx.io";

Run in local development mode, which makes debugging easy:

[root@VM-0-8-centos 05_Web_Front]# npm run dev

Build the project. The output is written to the dist folder under the project root; copy it into Nginx's html directory.

[root@VM-0-8-centos 05_Web_Front]# npm run build

Web_Admin Project

Set the API address in the http.js file:

export const BASEURL = axios.defaults.baseURL = 'http://11.22.33.44:6010/';

Run in local development mode:

[root@VM-0-8-centos 04_Web_Admin]# npm run dev

Build the project. The output is written to the dist folder under the project root; copy it into Nginx's html directory.

[root@VM-0-8-centos 04_Web_Admin]# npm run build

I hesitated to write the next sentence, because it really does insult your ability: do not copy the admin project's build output into the same Nginx html directory as the Web_Front build.

6.3 Running the Java Microservices

Because the system uses the Spring Cloud microservice architecture, the project contains quite a few Java modules. This can be confusing if you have not used Spring Cloud before. If you have read this far, I assume you have prepared the servers. Here is how the jar packages are distributed across them:

Server 1: web services, intranet IP 172.22.0.13

Runs: front end (www.xxxx.com / api.xxxx.com) and Nginx (SSL termination). Nginx path: /usr/local/nginx/html

Server 2: microservices such as the matching engine, intranet IP 172.22.0.3

Runs (1): cloud.jar / exchange.jar / market.jar / ucenter.jar / exchange-api.jar / wallet.jar

Runs (2): Nginx for microservice forwarding (/usr/share/nginx/html)

Server 3: Kafka/DB/Redis and other data services, intranet IP 172.22.0.12

Runs (1): ZooKeeper / Kafka / MongoDB / Redis

Runs (2): admin.jar / admin front-end resources (/usr/share/nginx/html)

Runs (3): robots: er_market.jar / er_robot_normal.jar

Server 4: BTC/ETH wallet node services, intranet IP 172.22.0.14

Runs: USDT node / ETH node / the jar packages under wallet_rpc

Server 5: MySQL cloud database

Runs: business database

Jar microservice startup order

- cloud.jar – microservice registry

- exchange.jar – matching engine (wait until cloud has fully started)

- market.jar – market data engine (wait until exchange has fully started)

- xxx.jar (the other jar packages; wait until market has fully started)
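You can check "fully started" through the registry itself. A Spring Cloud service registers with Eureka once it is up, and Eureka's REST endpoint `<defaultZone>apps/<APP_ID>` then answers 200. That makes a 200 response a reasonable proxy for "started".

Below is a minimal Python sketch of the startup order built on that idea. It makes these assumptions:

- The Eureka address is the `defaultZone` from 6.1.
- Each jar is started from the current directory with plain `java -jar`.
- The app IDs `EXCHANGE` and `MARKET` are hypothetical placeholders for each module's upper-cased `spring.application.name`.

```python
import subprocess
import time
import urllib.error
import urllib.request
from typing import Callable, List, Tuple

# Assumption: cloud.jar serves Eureka at the defaultZone address from application.properties.
EUREKA = "http://172.19.0.8:7000/eureka"

# (jar, Eureka app id to wait for). "EXCHANGE"/"MARKET" are hypothetical placeholders.
STARTUP_ORDER: List[Tuple[str, str]] = [
    ("cloud.jar", ""),  # the registry itself: wait until /apps answers
    ("exchange.jar", "EXCHANGE"),
    ("market.jar", "MARKET"),
]


def app_url(eureka: str, app_id: str) -> str:
    base = eureka.rstrip("/") + "/apps"
    return f"{base}/{app_id.upper()}" if app_id else base


def http_ok(url: str, timeout: float = 3.0) -> bool:
    """True if GET url returns 200; refused connections, timeouts and 404s mean 'not yet'."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        return False


def wait_until(check: Callable[[], bool], timeout: float, interval: float = 5.0) -> bool:
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if check():
            return True
        time.sleep(interval)
    return check()  # one last attempt at the deadline


def start_in_order(order: List[Tuple[str, str]] = STARTUP_ORDER, eureka: str = EUREKA,
                   timeout: float = 300.0) -> List[subprocess.Popen]:
    """Start each jar, then block until it is visible in Eureka before starting the next."""
    procs = []
    for jar, app_id in order:
        procs.append(subprocess.Popen(["java", "-jar", jar]))
        url = app_url(eureka, app_id)
        if not wait_until(lambda u=url: http_ok(u), timeout):
            raise RuntimeError(f"{jar} not visible at {url} after {timeout:.0f}s")
    return procs
```

Call `start_in_order()` and, once it returns, start the remaining jar packages. Each step waits up to five minutes. If a step times out, check that jar's log before anything else.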

6.4 Deployment of Robot

Robot Deployment

To understand the robot, you first need to know some trading logic yourself, which probably means having bought and sold stocks or crypto. If you have no background at all, the robot will be hard to understand; I suggest studying up online or opening an account to practice.

Think of a robot as a "real person" with sharp eyes and quick hands, who buys and sells based on prices from outside.

- Because a robot is a "real person", it needs an account; by default this is the user with ID 1.

- The robot account needs a lot of funds. Without enough balance it cannot place its buy and sell orders, because placing orders ties up a lot of money.

- Robots follow external market prices, so they need to "crawl" quotes from elsewhere. The robot project has two modules, er_market and er_robot_normal. The former acts as the "crawler" that fetches quotes from other exchanges. The latter is the "real person", which places orders on this exchange at prices based on what er_market fetched.
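To make the division of labor concrete: er_market supplies a reference price, and er_robot_normal turns it into orders. The Python sketch below shows one hypothetical way to do that second step: a ladder of bids below and asks above the reference price. It is not the algorithm in ExchangeRobotNormal.java, and the `spread`, `step` and `tick` parameters are illustrative assumptions.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_UP
from typing import List, Tuple


def quote_ladder(ref_price: Decimal, spread: Decimal, step: Decimal, levels: int,
                 tick: Decimal = Decimal("0.01")) -> Tuple[List[Decimal], List[Decimal]]:
    """Hypothetical robot pricing: bids below and asks above an external reference price.

    spread: fractional distance of the best bid/ask from ref_price (0.001 = 0.1%)
    step:   extra fractional distance added for each further level
    tick:   price precision of the trading pair
    """
    bids, asks = [], []
    for level in range(levels):
        offset = spread + step * level
        # Round bids down and asks up so rounding never narrows the spread.
        bids.append((ref_price * (1 - offset)).quantize(tick, rounding=ROUND_DOWN))
        asks.append((ref_price * (1 + offset)).quantize(tick, rounding=ROUND_UP))
    return bids, asks
```

With a reference price of 100, a 1 % spread and a 1 % step, three levels give bids at 99, 98 and 97 and asks at 101, 102 and 103. The sketch uses `Decimal` instead of float so that prices round exactly to the pair's tick size.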

Robot setup steps

- Modify the configuration of er_robot_normal. Besides the configuration file, two classes also need to be modified: ExchangeRobotNormal.java and ExchangeRobotCustom.java.

- Run er_market.jar and er_robot_normal.jar.

- Register an ordinary user with ID 1 through the front end.

- In the admin backend, under Member Management > View, give the user with ID 1 enough coins.

- In the admin backend, under Currency Management > Currency Settings list (which includes a robot list), create a new robot.

- Check the log of 'er_market.jar' to see whether market data has been received.

- Check the log of 'er_robot_normal.jar' to see whether it has sent orders.

- Check the log of 'exchange-api.jar' to see whether the orders have been received.

- Check the log of 'exchange.jar' to see whether a matching request has been received.

- Check the log of 'market.jar' to see whether matching results have been received.

6.5 iOS app packaging

iOS app configuration and packaging

Detailed reference: Packaging of ios app.pdf

6.6 Android app packaging

Android app configuration and packaging

Detailed reference: Packaging of Android.pdf

6.7 API doc

API documentation

Link: https://www.showdoc.com.cn/1715922165251556/8106848541443080

Access code: 115599

7. Common problems

7.1 Microservices Unreachable

When opening ports, keep in mind that some of them only need to be open to intranet servers. Do not announce to the whole world "ports are open, come on in": hackers don't care who you are. These are the settings that most often make a port unreachable:

- Alibaba Cloud security group

- Tencent Cloud security group

- Server firewall

- BT Panel (Baota) port blocking

Some microservice ports:

6001 6002 6003 6004 6005 6006 6007 6008 6009 6010 6011 6012 7000 7001 7002 7003 7004 7005 7006 7007 7008 7009 8801 10000 20000

Ports for the basic software (Redis, MySQL, MongoDB, Kafka):

6379 3306 27017 9092

Wallet node ports:

8333 8334 … That's all I can remember; look up the rest yourself.
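To find out which of the causes above applies, test the ports from a machine that should be able to reach them, such as another intranet server. Then test again from outside, to confirm that intranet-only ports really are closed to the internet.

Below is a minimal Python sketch. The port lists are copied from this section; for the basic software it assumes the default ports of Redis, MySQL, MongoDB and Kafka. As a rule of thumb, "refused" usually means nothing is listening, while "timeout" usually means a security group or firewall is silently dropping packets.

```python
import socket
from typing import Dict, Iterable

# Port lists from this section (basic software: Redis, MySQL, MongoDB, Kafka defaults).
MICROSERVICE_PORTS = list(range(6001, 6013)) + list(range(7000, 7010)) + [8801, 10000, 20000]
BASIC_PORTS = [6379, 3306, 27017, 9092]
WALLET_PORTS = [8333, 8334]


def check_port(host: str, port: int, timeout: float = 2.0) -> str:
    """Classify one TCP port as 'open', 'refused', 'timeout' or 'error'."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        return "refused"  # host answered but nothing listens (or a firewall rejects)
    except socket.timeout:
        return "timeout"  # packets silently dropped: check security groups / firewall
    except OSError:
        return "error"    # e.g. no route to host


def check_ports(host: str, ports: Iterable[int], timeout: float = 2.0) -> Dict[int, str]:
    return {port: check_port(host, port, timeout) for port in ports}
```

For example, run `{p: s for p, s in check_ports("172.22.0.3", MICROSERVICE_PORTS).items() if s != "open"}` from another intranet server to list only the problem ports on Server 2.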

7.2 Verification Code Error

Logging in to the admin backend sometimes fails: either the verification code is reported as wrong, or the login cannot get past the second step. Open Chrome's developer console and you will see a yellow warning like this:

A cookie associated with a cross-site resource at http://49.234.13.106/ was set without the `SameSite` attribute. It has been blocked, as Chrome now only delivers cookies with cross-site requests if they are set with `SameSite=None` and `Secure`. You can review cookies in developer tools under Application>Storage>Cookies and see more details at https://www.chromestatus.com/feature/5088147346030592 and https://www.chromestatus.com/feature/5633521622188032.

You are most likely using a recent version of Chrome. Chrome began warning about the SameSite attribute in version 77. From Chrome 80 on, it treats cookies without a SameSite attribute as SameSite=Lax, so they are no longer sent with cross-site requests. Without the cookie, the session cannot be used.

Solution reference: [New version of Chrome cross-domain problem: the SameSite attribute of cookies](https://blog.csdn.net/qq_38527695/article/details/104899751?utm_medium=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromMachineLearnPai2-1.channel_param&depth_1-utm_source=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromMachineLearnPai2-1.channel_param "New chrome cross domain problem: samesite attribute of cookie")

The article offers several solutions. Disabling the SameSite check in the Chrome browser is the quickest; after all, this only affects the admin backend.
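You can check whether a given cookie will be sent by looking at its Set-Cookie response header (DevTools > Network > the login or captcha response). Chrome sends a cookie on cross-site requests only if it carries both SameSite=None and Secure. A minimal Python sketch of that rule follows.

Note also that modern browsers ignore Secure cookies set over plain HTTP. An admin backend served over http, as in the warning above, therefore cannot use the SameSite=None route until it has a certificate. This is one reason disabling the check in the browser is the quickest fix.

```python
from typing import Dict


def cookie_attributes(set_cookie: str) -> Dict[str, str]:
    """Parse the attributes of one Set-Cookie header value (the name=value pair is skipped)."""
    attrs = {}
    for part in set_cookie.split(";")[1:]:
        name, _, value = part.strip().partition("=")
        attrs[name.lower()] = value.strip()
    return attrs


def sent_cross_site(set_cookie: str) -> bool:
    """Chrome (80+) sends a cookie cross-site only if it is SameSite=None and Secure."""
    attrs = cookie_attributes(set_cookie)
    return attrs.get("samesite", "").lower() == "none" and "secure" in attrs
```

For example, `sent_cross_site("JSESSIONID=abc; Path=/; HttpOnly")` is `False`. That is the situation described by the warning above.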

7.3 TCP Forwarding with nginx

This is not a general problem, but one I ran into during my own development.

Background

App socket push uses port 28901. The microservice server listens on it automatically, and the app receives socket pushes from there. However, while developing the contract module, I placed the contract jar on a different server, so its socket port was listening on that other server. To expose all socket ports through a single host, I used nginx for port forwarding.

Configuration method

Configuring nginx port forwarding is simple. Add the following block to nginx.conf at the top level (the stream block must not be placed inside the http block):

stream {

server {

listen 38901;

proxy_connect_timeout 2s;

proxy_timeout 10s;

proxy_pass 172.17.0.4:38901;

}

server {

listen 38985;

proxy_connect_timeout 2s;

proxy_timeout 10s;

proxy_pass 172.17.0.4:38985;

}

server {

listen 48901;

proxy_connect_timeout 2s;

proxy_timeout 10s;

proxy_pass 172.17.0.4:48901;

}

server {

listen 48985;

proxy_connect_timeout 2s;

proxy_timeout 10s;

proxy_pass 172.17.0.4:48985;

}

}
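The four server blocks differ only in the port, so as more socket ports are added it may be easier to generate the block. The small Python sketch below assumes, as in the example, that each local port is forwarded to the same port on a single upstream host.

```python
from typing import Iterable


def stream_block(upstream_host: str, ports: Iterable[int],
                 connect_timeout: str = "2s", timeout: str = "10s") -> str:
    """Render an nginx stream{} block forwarding each local port to the same port upstream."""
    lines = ["stream {"]
    for port in ports:
        lines += [
            "    server {",
            f"        listen {port};",
            f"        proxy_connect_timeout {connect_timeout};",
            f"        proxy_timeout {timeout};",
            f"        proxy_pass {upstream_host}:{port};",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"
```

`print(stream_block("172.17.0.4", [38901, 38985, 48901, 48985]))` reproduces the configuration above. One design point to keep in mind is that `proxy_timeout` is the idle time allowed between two successive reads or writes. With 10s, a push socket that stays silent for longer is closed. If apps reconnect frequently, consider raising it (nginx's default is 10m).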

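However, TCP forwarding requires nginx to be built with the stream module. If yours is not, add it as follows:

1. Check the nginx version and compiled modules. If --with-stream already appears under "configure arguments", as it does in the output below, the module is present and no rebuild is needed.

[root@VM_0_15_centos nginx]# nginx -V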

nginx version: nginx/1.16.0

built by gcc 4.8.5 20150623 (Red Hat 4.8.5-36) (GCC)

built with OpenSSL 1.0.2k-fips 26 Jan 2017

TLS SNI support enabled

configure arguments: --prefix=/etc/nginx --sbin-path=/usr/sbin/nginx --modules-path=/usr/lib64/nginx/modules --conf-path=/etc/nginx/nginx.conf --error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --pid-path=/var/run/nginx.pid --lock-path=/var/run/nginx.lock --http-client-body-temp-path=/var/cache/nginx/client_temp --http-proxy-temp-path=/var/cache/nginx/proxy_temp --http-fastcgi-temp-path=/var/cache/nginx/fastcgi_temp --http-uwsgi-temp-path=/var/cache/nginx/uwsgi_temp --http-scgi-temp-path=/var/cache/nginx/scgi_temp --user=nginx --group=nginx --with-compat --with-file-aio --with-threads --with-http_addition_module --with-http_auth_request_module --with-http_dav_module --with-http_flv_module --with-http_gunzip_module --with-http_gzip_static_module --with-http_mp4_module --with-http_random_index_module --with-http_realip_module --with-http_secure_link_module --with-http_slice_module --with-http_ssl_module --with-http_stub_status_module --with-http_sub_module --with-http_v2_module --with-mail --with-mail_ssl_module --with-stream --with-stream_realip_module --with-stream_ssl_module --with-stream_ssl_preread_module --with-cc-opt='-O2 -g -pipe -Wall -Wp,-D_FORTIFY_SOURCE=2 -fexceptions -fstack-protector-strong --param=ssp-buffer-size=4 -grecord-gcc-switches -m64 -mtune=generic -fPIC' --with-ld-opt='-Wl,-z,relro -Wl,-z,now -pie' --with-stream
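Reading the configure arguments by eye is error-prone, because --with-stream is easily confused with --with-stream_realip_module and similar flags. Below is a minimal Python sketch that parses `nginx -V` output (nginx prints it to stderr) and reports one of three results:

- The stream module is compiled in statically.
- It is built as a dynamic module, in which case nginx.conf also needs a `load_module` line for `ngx_stream_module.so`.
- It is not present at all.

```python
import re
import shlex
import subprocess
from typing import List, Optional


def configure_args(nginx_v_output: str) -> List[str]:
    """Split the 'configure arguments:' line of `nginx -V` output, honoring quotes."""
    match = re.search(r"^configure arguments:(.*)$", nginx_v_output, re.MULTILINE)
    return shlex.split(match.group(1)) if match else []


def stream_support(nginx_v_output: str) -> Optional[str]:
    """'static', 'dynamic' (needs load_module), or None if the stream module is absent."""
    args = configure_args(nginx_v_output)
    if "--with-stream" in args:
        return "static"
    if "--with-stream=dynamic" in args:
        return "dynamic"
    return None


def installed_stream_support(binary: str = "nginx") -> Optional[str]:
    # nginx -V writes its version and build information to stderr, not stdout.
    result = subprocess.run([binary, "-V"], capture_output=True, text=True)
    return stream_support(result.stderr)
```

For the output shown above, `stream_support` returns `"static"`, so that particular build can already use the stream block without recompiling.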

2. Download the source code of the same nginx version

cd /opt

wget http://nginx.org/download/nginx-1.16.0.tar.gz

tar xf nginx-1.16.0.tar.gz && cd nginx-1.16.0

3. Back up the original nginx binary and configuration

mv /usr/sbin/nginx /usr/sbin/nginx.bak

cp -r /etc/nginx{,.bak}

4. Recompile nginx

Take the existing configure arguments from step 1 and add the module you need this time: --with-stream

cd /opt/nginx-1.16.0

./configure --prefix=/etc/nginx --sbin-path=/usr/sbin/nginx --modules-path=/usr/lib64/nginx/modules --conf-path=/etc/nginx/nginx.conf --error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --pid-path=/var/run/nginx.pid --lock-path=/var/run/nginx.lock --http-client-body-temp-path=/var/cache/nginx/client_temp --http-proxy-temp-path=/var/cache/nginx/proxy_temp --http-fastcgi-temp-path=/var/cache/nginx/fastcgi_temp --http-uwsgi-temp-path=/var/cache/nginx/uwsgi_temp --http-scgi-temp-path=/var/cache/nginx/scgi_temp --user=nginx --group=nginx --with-compat --with-file-aio --with-threads --with-http_addition_module --with-http_auth_request_module --with-http_dav_module --with-http_flv_module --with-http_gunzip_module --with-http_gzip_static_module --with-http_mp4_module --with-http_random_index_module --with-http_realip_module --with-http_secure_link_module --with-http_slice_module --with-http_ssl_module --with-http_stub_status_module --with-http_sub_module --with-http_v2_module --with-mail --with-mail_ssl_module --with-stream --with-stream_realip_module --with-stream_ssl_module --with-stream_ssl_preread_module --with-cc-opt='-O2 -g -pipe -Wall -Wp,-D_FORTIFY_SOURCE=2 -fexceptions -fstack-protector-strong --param=ssp-buffer-size=4 -grecord-gcc-switches -m64 -mtune=generic -fPIC' --with-ld-opt='-Wl,-z,relro -Wl,-z,now -pie' --with-stream

If the configure step reports missing dependencies, the following packages are usually what is needed. Install them and run configure again:

yum -y install libxml2 libxml2-devel libxslt-devel

yum -y install gd-devel

yum -y install perl-devel perl-ExtUtils-Embed

yum -y install GeoIP GeoIP-devel GeoIP-data

yum -y install pcre-devel

yum -y install openssl openssl-devel gperftools

5. Compile and verify

Run make. After make finishes, do not run make install, which would overwrite the existing nginx installation. make produces a new nginx binary in the objs directory; verify it first:

/opt/nginx-1.16.0/objs/nginx -t

/opt/nginx-1.16.0/objs/nginx -V

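6. Replace the nginx binary and restart

cp /opt/nginx-1.16.0/objs/nginx /usr/sbin/

nginx -s stop && nginx

A full restart is needed here (or systemctl restart nginx if nginx is managed by systemd). nginx -s reload only re-reads the configuration and keeps running the old binary.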

7. Verify that --with-stream now appears in the output

[root@pre ~]# nginx -V

Reference: https://www.bbsmax.com/A/rV57vx9RJP/

7.4 Geth Fails to Send Transactions

1. Symptoms

After the Ethereum geth client is upgraded to v1.10.0, sending a transaction fails with the following error:

err="only replay-protected (EIP-155) transactions allowed over RPC"

2. Cause

geth v1.10.0 changed how transactions submitted over JSON-RPC are accepted. Transactions without EIP-155 replay protection (that is, signed without a chain ID) are now rejected by default. This lays the groundwork for banning unprotected transactions entirely in a later release; until then, the old behavior can be restored with a command-line flag.

3. Solution

3.1 Temporary workaround

Start the node with the --rpc.allow-unprotected-txs flag, for example:

geth --rpcapi "db,eth,net,web3,personal,admin,miner" --rpc --rpcaddr "0.0.0.0" --cache 2048 --maxpeers 30 --allow-insecure-unlock --rpc.allow-unprotected-txs

Note: this flag exists because the Ethereum developers recognize that the people and tools publishing unprotected transactions cannot change overnight. With --rpc.allow-unprotected-txs, geth v1.10.0 can be switched back to the old behavior of accepting non-EIP-155 transactions. It is a temporary mechanism that is planned for removal in a release after v1.10.0. Also note that the command above exposes the personal and admin APIs on all interfaces with --allow-insecure-unlock, so keep the RPC port reachable only from your intranet servers.

3.2 Permanent fix

Include the chain ID when signing transactions (EIP-155), so that a signed transaction cannot be replayed on other chains.

Note: if you sign with web3j, use its latest version.
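The effect of adding the chain ID is visible in the signature's v value of a legacy (non-typed) transaction. Per EIP-155, a protected signature has v = recovery_id + chain_id * 2 + 35, which is 37 or 38 on mainnet (chain ID 1). A legacy unprotected signature has v = 27 or 28, and that is what geth v1.10.0 rejects over RPC.

Below is a minimal Python sketch of the arithmetic, useful for checking what your signer actually produced.

```python
from typing import Optional


def eip155_v(recovery_id: int, chain_id: int) -> int:
    """v of an EIP-155 signature: recovery_id (0 or 1) + chain_id * 2 + 35."""
    if recovery_id not in (0, 1):
        raise ValueError("recovery_id must be 0 or 1")
    return recovery_id + chain_id * 2 + 35


def chain_id_from_v(v: int) -> Optional[int]:
    """Chain id encoded in v, or None for a legacy unprotected signature (v = 27 or 28)."""
    if v in (27, 28):
        return None
    if v < 35:
        raise ValueError(f"unexpected v value {v}")
    return (v - 35) // 2
```

If `chain_id_from_v` returns `None` for a transaction your wallet service built, the transaction was signed without a chain ID. web3j's `TransactionEncoder` provides `signMessage` overloads that take a chain ID.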

Attention

ETH clients are updated frequently. If a problem appears, check the release notes on the official GitHub to see whether an upgrade caused it.

Appendix

Chain ID list: https://chainid.network/

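7.5 Fixing the Apache Log4j Vulnerability

Because of the critical Apache Log4j vulnerability (Log4Shell, CVE-2021-44228), the first recommendation was to upgrade Log4j to 2.15.x. New vulnerabilities were then found in 2.15.x, so the advice became 2.16.x. Problems were found in 2.16.x as well, so the current advice is 2.17.x.

Given this, here is a temporary mitigation that closes the hole quickly without upgrading Log4j. Apply any one of the following. These options only take effect on Log4j 2.10.0 and later, and Apache later stated that this mitigation does not cover every attack path, so upgrading remains the real fix.

- Add the JVM parameter -Dlog4j2.formatMsgNoLookups=true

- Set log4j2.formatMsgNoLookups=true in the Log4j configuration (for example in a log4j2.component.properties file on the classpath)

- Set the system environment variable LOG4J_FORMAT_MSG_NO_LOOKUPS=true, the name given in Apache's advisory. (FORMAT_MESSAGES_PATTERN_DISABLE_LOOKUPS, which some guides quote, is the name of the corresponding constant inside Log4j's code, not an environment variable.)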
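Before relying on these settings, check which Log4j version each jar actually bundles. The settings do nothing below 2.10.0, and anything below 2.17.1 (the release that fixed CVE-2021-44832, the last of the December 2021 CVEs) still needs upgrading.

Below is a minimal Python sketch that looks inside Spring Boot fat jars for a nested `log4j-core-x.y.z.jar`. It assumes Log4j is bundled as a separate jar rather than shaded. A jar with no match may simply use Logback instead.

```python
import re
import zipfile
from typing import List, Tuple

# Matches nested jars such as BOOT-INF/lib/log4j-core-2.14.1.jar in a Spring Boot fat jar.
# Assumption: log4j-core is bundled unshaded; pre-release names like 2.0-beta9 are not matched.
LOG4J_CORE_JAR = re.compile(r"(?:^|/)log4j-core-(\d+)\.(\d+)\.(\d+)\.jar$")

FIXED = (2, 17, 1)  # first release without the December 2021 CVEs


def log4j_core_versions(jar) -> List[Tuple[int, int, int]]:
    """Return the log4j-core versions bundled inside a jar (path or binary file object)."""
    with zipfile.ZipFile(jar) as zf:
        versions = []
        for name in zf.namelist():
            match = LOG4J_CORE_JAR.search(name)
            if match:
                versions.append(tuple(int(part) for part in match.groups()))
        return versions


def needs_upgrade(version: Tuple[int, int, int]) -> bool:
    return version < FIXED


def supports_no_lookups_flag(version: Tuple[int, int, int]) -> bool:
    """formatMsgNoLookups / LOG4J_FORMAT_MSG_NO_LOOKUPS only exist from 2.10.0 on."""
    return version >= (2, 10, 0)
```

Usage: `for jar in ["exchange.jar", "market.jar"]: print(jar, log4j_core_versions(jar))`. Run it on every jar listed in 6.3.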

7.6 Adding a Trading Pair

Step 1. Add a new currency

- System Management > Currency Management: add the currency.

- Then click the [Add] button on the right to generate wallet asset records for users. This is an asynchronous call that creates an asset record of the new currency for every user, and it returns no message.

- Member Management > View: check whether the new currency appears in users' asset records. If it does, the asset records were created successfully.

Step 2. Add a new trading pair

- Currency Management > Currency Settings: fill in the required information and add the trading pair. Once it is added there will be no quotes yet. To generate trades and quotes for the pair, create a robot; for a description of the robot, see the other article in the [System Deployment] directory.

- Member Management > View: top up the robot account with ID 1.

- Currency Management > Currency Settings: add a robot and set its parameters.

- Check the front-end market page to see whether orders are being placed.

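7.7 Cleaning Up Log Space

Reclaiming disk space when logs were deleted without stopping the service

1. Check whether disk space is unexpectedly occupied

[root@VM-0-9-centos ~]$ df -h

Filesystem Size Used Avail Use% Mounted on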

devtmpfs 7.8G 0 7.8G 0% /dev

tmpfs 7.8G 24K 7.8G 1% /dev/shm

tmpfs 7.8G 584K 7.8G 1% /run

tmpfs 7.8G 0 7.8G 0% /sys/fs/cgroup

/dev/vda1 99G 14G 82G 14% /

tmpfs 1.6G 0 1.6G 0% /run/user/0

2. Check space usage and look for directories that cannot be accessed

[root@VM-0-9-centos ~]$ du -sh /*

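du: cannot access '/proc/13532/task/1499/fd/36': No such file or directory

du: cannot access '/proc/13532/task/1603/fdinfo/36': No such file or directory

du: cannot access '/proc/13532/task/1604/fdinfo/36': No such file or directory

du: cannot access '/proc/13532/task/1947/fd/36': No such file or directory

du: cannot access '/proc/13532/task/1948/fdinfo/36': No such file or directory

du: cannot access '/proc/13532/task/1972/fd/36': No such file or directory

du: cannot access '/proc/13532/task/2044/fdinfo/36': No such file or directory

du: cannot access '/proc/13532/task/2193/fdinfo/36': No such file or directory

du: cannot access '/proc/13532/task/2434/fd/36': No such file or directory

du: cannot access '/proc/13532/task/2434/fdinfo/37': No such file or directory

du: cannot access '/proc/13532/task/2849/fdinfo/37': No such file or directory

du: cannot access '/proc/13532/task/19920/fdinfo/36': No such file or directory

du: cannot access '/proc/13532/task/19921/fd/36': No such file or directory

du: cannot access '/proc/13532/task/6236/fdinfo/37': No such file or directory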

0 /proc

532K /root

556K /run

0 /sbin

4.0K /srv

0 /sys

104K /tmp

19G /usr

2.5G /var

3. List files that have been deleted but are still held open, and the processes holding them

[root@VM-0-9-centos ~]$ lsof | grep deleted

java 20050 20864 root 164u REG 253,1 0 659358 /tmp/kafka-logs/__consumer_offsets-34/00000000000000000000.log (deleted)

java 20050 20864 root 165u REG 253,1 0 659364 /tmp/kafka-logs/__consumer_offsets-0/00000000000000000000.log (deleted)

java 20050 20864 root 166u REG 253,1 0 659379 /tmp/kafka-logs/__consumer_offsets-32/00000000000000000000.log (deleted)

java 20050 20864 root 167u REG 253,1 0 659390 /tmp/kafka-logs/__consumer_offsets-44/00000000000000000000.log (deleted)

java 20050 20864 root 168u REG 253,1 0 661940 /tmp/kafka-logs/__consumer_offsets-8/00000000000000000000.log (deleted)

java 20050 20864 root 169u REG 253,1 0 662882 /tmp/kafka-logs/__consumer_offsets-42/00000000000000000000.log (deleted)

java 20050 20864 root 170u REG 253,1 0 662900 /tmp/kafka-logs/__consumer_offsets-33/00000000000000000000.log (deleted)

4. Find the process

[root@VM-0-9-centos ~]$ ps -ef | grep 20050

5. Kill the process; the space is released

[root@VM-0-9-centos ~]$ kill -9 20050

6. Restart the process you just killed

xxxxxxxxxxxx
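Steps 3 to 5 can also be done without lsof, by reading /proc directly. The following Linux-only Python sketch lists files that are deleted but still open, together with their sizes, so you can see which process to restart and how much space that will free. Run it as root to see other users' processes. The function names are illustrative.

```python
import os
from typing import Dict, Iterable, List, Optional, Tuple

DELETED_SUFFIX = " (deleted)"


def deleted_open_files(pids: Optional[Iterable[int]] = None,
                       proc: str = "/proc") -> List[Tuple[int, str, int]]:
    """List (pid, path, size_bytes) for files that are deleted but still held open (Linux only)."""
    if pids is None:
        pids = [int(d) for d in os.listdir(proc) if d.isdigit()]
    found = []
    for pid in pids:
        fd_dir = os.path.join(proc, str(pid), "fd")
        try:
            fds = os.listdir(fd_dir)
        except OSError:  # process exited or permission denied
            continue
        for fd in fds:
            link = os.path.join(fd_dir, fd)
            try:
                target = os.readlink(link)
                if not target.endswith(DELETED_SUFFIX):
                    continue
                # stat() follows the /proc fd link to the still-open inode.
                size = os.stat(link).st_size
            except OSError:  # fd closed while we were looking
                continue
            found.append((pid, target[: -len(DELETED_SUFFIX)], size))
    return found


def reclaimable_by_pid(entries: List[Tuple[int, str, int]]) -> Dict[int, int]:
    """Total bytes freed per process if it closed (or was restarted) its deleted files."""
    totals: Dict[int, int] = {}
    for pid, _path, size in entries:
        totals[pid] = totals.get(pid, 0) + size
    return totals
```

Usage: `sorted(reclaimable_by_pid(deleted_open_files()).items(), key=lambda kv: -kv[1])` puts the process holding the most space first. In the example above, that is the Kafka process, PID 20050.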

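More du usage

du -sh # total space used by the current directory

du -sh * # space used by each file or directory in the current directory

du -h --max-depth=1 # only descend one level

rm -rf xxx # delete only after confirming the file or directory is no longer in use

8. Some Business Logic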

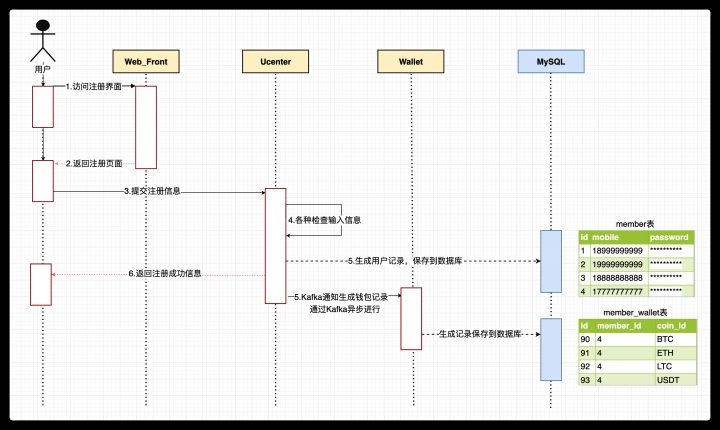

8.1 User Registration Logic

What problems can occur?

Anyone who programs can work out the registration logic for themselves: it creates the user record plus some user-related initialization records (in the member and member_wallet tables). Note step 5: the wallet records are generated asynchronously through Kafka. During deployment, some people find that registration succeeds but no user wallet records are generated (check the user's assets in asset management). There are two possible causes: either wallet.jar is not running, or Kafka is not working properly.

Why use Kafka to generate records asynchronously?

When the system was first built, registering a user also generated that user's wallet addresses for BTC, ETH and other currencies. That requires calling the node's RPC and is time-consuming, so it became a performance bottleneck when many users registered at once; Kafka notifications were therefore used to make it asynchronous. I later optimized this part further: wallet addresses are no longer generated right after registration, but only when the user clicks the recharge (deposit) button for a currency. This greatly reduces the load on the wallet nodes and removes unnecessary complexity for the operations staff who manage the wallets.
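This paragraph describes two ideas: publish an event instead of doing the work during registration, and generate the address only on the first recharge click. Both can be sketched in a few lines of Python. In this sketch, `queue.Queue` stands in for Kafka and a callback stands in for the node RPC that creates an address. The class and function names are hypothetical and do not reflect the project's actual code.

```python
import queue
import threading
from typing import Callable, Dict, Iterable, Optional, Tuple


class WalletService:
    """Stand-in for wallet.jar: consumes registration events and owns member wallet records."""

    def __init__(self, coins: Iterable[str], new_address: Callable[[str], str]):
        self.coins = list(coins)
        self.new_address = new_address  # hypothetical hook wrapping a node RPC call
        self.wallets: Dict[Tuple[int, str], Optional[str]] = {}
        self._lock = threading.Lock()

    def on_member_registered(self, member_id: int) -> None:
        # One record per coin with no address yet, so registration never waits on a node.
        with self._lock:
            for coin in self.coins:
                self.wallets.setdefault((member_id, coin), None)

    def deposit_address(self, member_id: int, coin: str) -> str:
        # Called when the user clicks "recharge": create the address once, then reuse it.
        with self._lock:
            key = (member_id, coin)
            if key not in self.wallets:
                raise KeyError(f"no wallet record for {key}; is the consumer running?")
            if self.wallets[key] is None:
                self.wallets[key] = self.new_address(coin)
            return self.wallets[key]


def start_consumer(events: "queue.Queue", wallet: WalletService) -> threading.Thread:
    """Drain registration events in the background; None is a stop signal."""
    def run() -> None:
        while True:
            member_id = events.get()
            if member_id is None:
                break
            wallet.on_member_registered(member_id)

    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread


def register(member_id: int, events: "queue.Queue") -> None:
    # Saving the member row is omitted; registration only publishes the event and returns.
    events.put(member_id)
```

`register()` returns as soon as the event is queued. The consumer thread later creates one empty record per coin, and `deposit_address()` calls the node only the first time a user asks for a given coin's address. If the consumer never runs (in the real system: wallet.jar is down or Kafka is broken), the records never appear, which is the symptom described above.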

Why a successful registration matters

User registration is a key check of whether your system works end to end. Once it succeeds, you know that your SMS service, database, Kafka, ucenter-api.jar, wallet.jar and so on are running normally, and you can move on to more complex operations with confidence.